Google is pushing forward with its AI ambitions, rolling out experimental versions of its Gemini 2.0 models to a broader audience. After last week’s announcement, users on Android and iOS can now access Gemini 2.0 Flash Thinking Experimental and 2.0 Pro Experimental directly within the Gemini app.

A Closer Look at Gemini 2.0 Flash Thinking Experimental

The standard Gemini 2.0 Flash remains Google’s default AI model, focusing on speed and efficiency. However, the experimental Flash Thinking variant adds a new dimension—transparency in AI reasoning. This model doesn’t just provide answers; it gives a step-by-step breakdown of its thought process.

So what exactly does this mean? After entering a prompt, users will see Gemini work through a series of labeled reasoning stages, such as:

- Show thinking – Displays the AI’s internal process.

- Identify the question’s scope – Determines the boundaries of the query.

- Recognize different perspectives – Considers alternative viewpoints.

- Brainstorm key concepts and related questions – Maps out potential directions for answering.

- Structure the answer – Organizes information logically.

- Refine and elaborate – Expands on the response with depth.

- Consider nuance and caveats – Adds complexity and considerations.

- Review and edit – Finalizes the response for clarity.

Exposing these intermediate steps is useful for anyone who wants to understand how the model arrives at a response rather than just seeing the output. Google is clearly positioning this model as a more interactive and explainable AI.

Real-Time AI Responses and Mobile Functionality

The mobile experience with Gemini 2.0 Flash Thinking Experimental introduces a “Thoughts” section, which precedes the standard AI response. This allows users to witness the AI’s reasoning unfold in real time before receiving the final answer.

One standout feature is the speed at which responses appear. Built on the efficiency of the standard Gemini 2.0 Flash model, text streams in real time—often faster than users can read. While this rapid output is impressive, it also raises usability questions. Will users be able to absorb the AI’s thought process effectively? Time will tell.

However, as a preview model, it does come with limitations:

- No real-time information access – Unlike some AI models that fetch up-to-date web data, this version relies only on the knowledge it was trained on.

- Limited Gemini features – Some functionalities available in other versions of Gemini are missing here.

Reasoning Across Google Apps

Google isn’t stopping at standalone AI chat. The company is also integrating Gemini 2.0 Flash Thinking Experimental into its suite of apps, including YouTube, Maps, and Search.

This means the AI can assist with more complex, multi-step reasoning tasks across different Google services. For example, users might ask Gemini for travel recommendations based on Google Maps data, generate research insights using Search, or analyze video content from YouTube in more detail.

The key advantage? Context awareness. Instead of treating each query as an isolated request, Gemini can draw connections across platforms, offering richer and more relevant insights.

Who Can Access These Experimental Models?

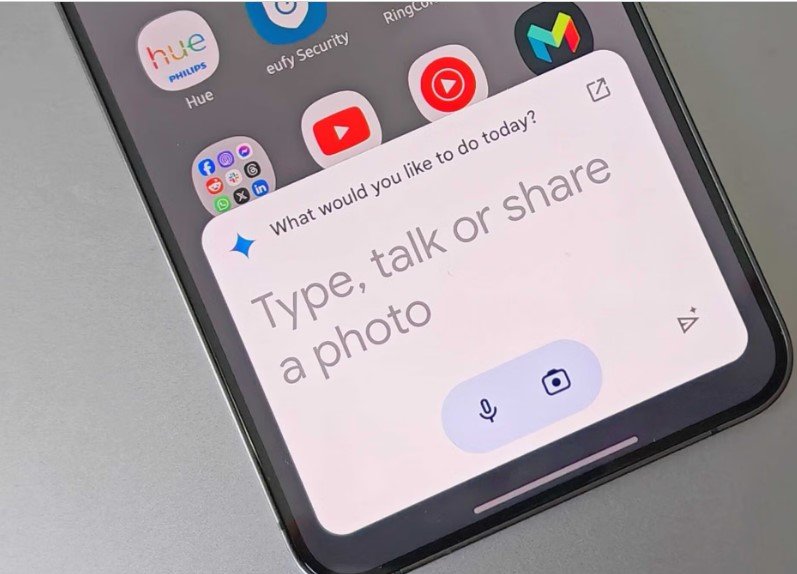

For now, Google is making both Gemini 2.0 Flash Thinking Experimental and 2.0 Pro Experimental available to all users for free. As of today, a broad rollout is in progress, with the update landing in Gemini’s Android and iOS apps.

This expansion signals Google’s intent to refine its AI models through large-scale user feedback. By letting everyday users interact with these experimental versions, Google can observe how people engage with AI-driven reasoning, and that data will in turn shape future improvements.

Google’s move also underscores the company’s broader AI strategy—making its models more accessible, explainable, and seamlessly integrated into everyday digital experiences.